Quora Insincere Questions Classification Using BERT

Fine-Tune a BERT Model for Text Classification with TensorFlow to Detect Toxicity in Quora Questions

- Overview

- Install TensorFlow and TensorFlow Model Garden

- Download and Import the Quora Insincere Questions Dataset

- Create tf.data.Datasets for Training and Evaluation

- Useful Links

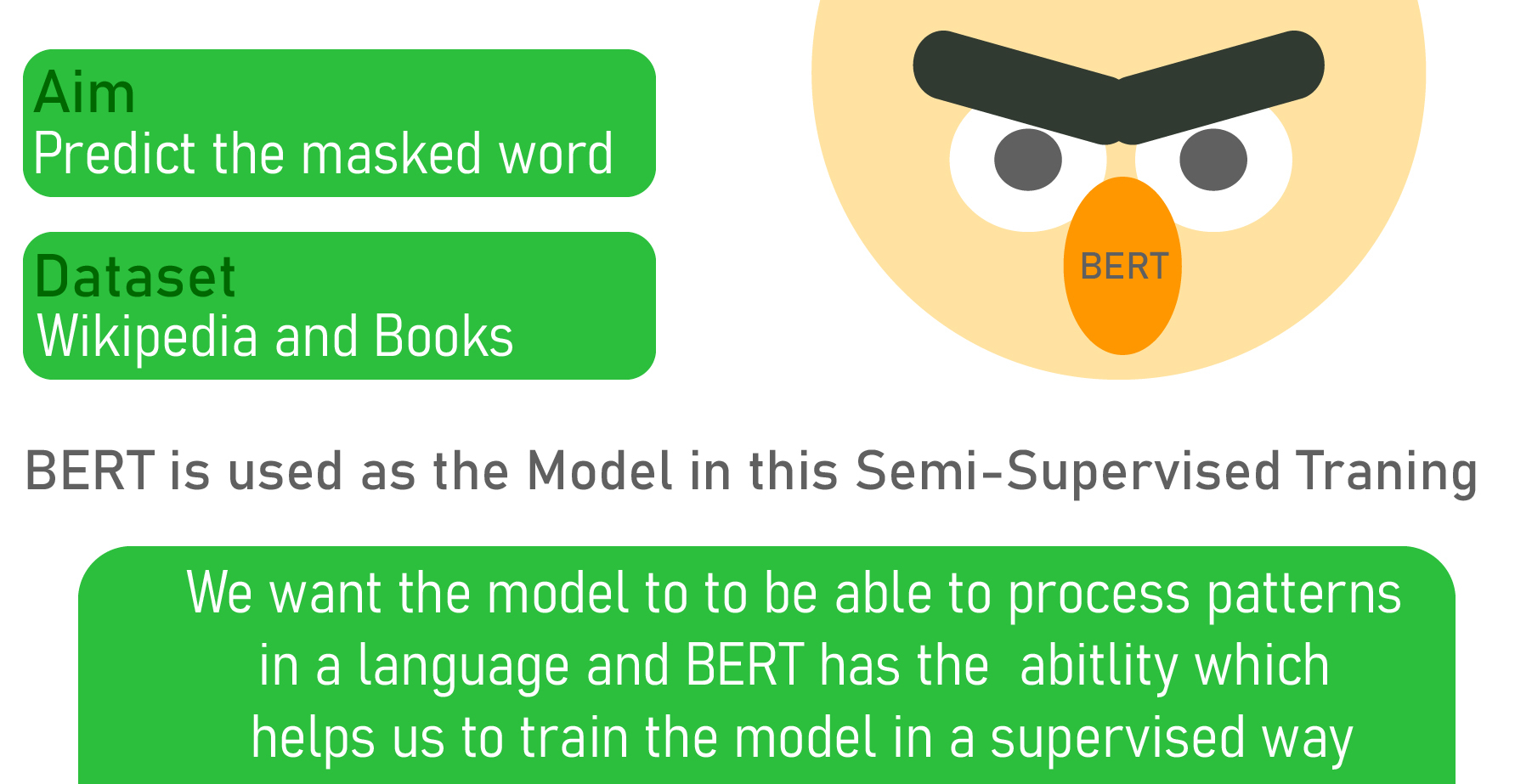

Figure 1: BERT Classification Model

Overview

- Predict whether a question asked on Quora is sincere or not. The model takes a Quora question as input and outputs "Sincere" or "Insincere", so this is a binary classification problem on text data.

- Because the Quora dataset is large and this problem is harder to solve well with recurrent or 1D-convolutional networks (LSTM, GRU, Conv1D), we use the state-of-the-art Transformer model BERT (Bidirectional Encoder Representations from Transformers).

The pretrained BERT model used in this project is available on TensorFlow Hub.

!nvidia-smi

import tensorflow as tf

print(tf.version.VERSION)

!pip install -q tensorflow==2.3.0

!git clone --depth 1 -b v2.3.0 https://github.com/tensorflow/models.git

!pip install -Uqr models/official/requirements.txt

# you may have to restart the runtime afterwards

import numpy as np

import tensorflow as tf

import tensorflow_hub as hub

import sys

sys.path.append('models')

from official.nlp.data import classifier_data_lib

from official.nlp.bert import tokenization

from official.nlp import optimization

print("TF Version: ", tf.__version__)

print("Eager mode: ", tf.executing_eagerly())

print("Hub version: ", hub.__version__)

print("GPU is", "available" if tf.config.experimental.list_physical_devices("GPU") else "NOT AVAILABLE")

A downloadable copy of the Quora Insincere Questions Classification data can be found at https://archive.org/download/fine-tune-bert-tensorflow-train.csv/train.csv.zip. pandas can decompress the archive and read the data into a DataFrame in one step.

import numpy as np

import pandas as pd

# read the dataset directly from the link above; it can also be downloaded from Kaggle:
# https://www.kaggle.com/c/quora-insincere-questions-classification/data
df = pd.read_csv('https://archive.org/download/fine-tune-bert-tensorflow-train.csv/train.csv.zip',
                 compression='zip', low_memory=False)

# check the number of rows and columns in the dataset

df.shape

df.head(20)

df.target.plot(kind = 'hist', title = 'target distribution')

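As the histogram shows, the dataset is imbalanced: there are far fewer insincere (1) questions than sincere (0) ones. A plain random split could leave one label under-represented, so we use stratified sampling, which keeps the proportion of each label the same in the training and validation sets and ensures both labels appear in each.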
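To see what `stratify` does, here is a minimal sketch on a small synthetic DataFrame (a hypothetical stand-in for the Quora data, with 5% positive labels). It checks that a stratified sample keeps the same label proportion as the full data.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data: every 20th question is "insincere" (1), i.e. 5% positives.
toy_df = pd.DataFrame({
    "question_text": [f"question {i}" for i in range(2000)],
    "target": [1 if i % 20 == 0 else 0 for i in range(2000)],
})

# Take a small stratified sample, mirroring the call used on the real data.
sample, _ = train_test_split(
    toy_df, random_state=42, train_size=0.1, stratify=toy_df.target.values
)

print(toy_df.target.mean())  # share of positives in the full toy data
print(sample.target.mean())  # share of positives in the stratified sample
print(len(sample))           # number of sampled rows
```

With 200 sampled rows, stratification allocates exactly 10 positives, matching the 5% rate of the full toy data.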

from sklearn.model_selection import train_test_split

# 0.75% of all rows for training, stratified on the target label
train_df, remaining = train_test_split(df, random_state=42, train_size=0.0075, stratify=df.target.values)
# 0.075% of the remaining rows for validation, also stratified
valid_df, _ = train_test_split(remaining, random_state=42, train_size=0.00075, stratify=remaining.target.values)

# check the shapes of the training and validation sets
train_df.shape, valid_df.shape

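Because the full dataset is very large, training on all of it would take much longer, so we use only a small sample: 0.75% of the rows for training and 0.075% of the remaining rows for validation, which gives roughly a 90%/10% train/validation split of the sampled data. To avoid an I/O bottleneck while feeding the model, we build the inputs as tf.data.Dataset pipelines.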
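The roughly 90%/10% ratio follows from the two `train_size` values, because the second split is taken from what remains after the first. A short worked calculation of the relative shares (pure arithmetic, independent of the actual row count):

```python
# Fractions passed to train_test_split in the two calls above.
train_frac = 0.0075                       # share of the full dataset used for training
valid_frac = 0.00075 * (1 - train_frac)   # 0.075% of the *remaining* rows

# Relative share of each split within the sampled subset.
train_share = train_frac / (train_frac + valid_frac)
valid_share = valid_frac / (train_frac + valid_frac)

print(f"train: {train_share:.1%}, valid: {valid_share:.1%}")  # train: 91.0%, valid: 9.0%
```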

with tf.device('/cpu:0'):
    train_data = tf.data.Dataset.from_tensor_slices((train_df.question_text.values, train_df.target.values))
    valid_data = tf.data.Dataset.from_tensor_slices((valid_df.question_text.values, valid_df.target.values))

# inspect one (text, label) pair from the tf.data pipeline
for text, label in train_data.take(1):
    print(text)
    print(label)

"""

Each element of the dataset is composed of the question text and its label.

- Data preprocessing consists of transforming the text into BERT input features:
  input_word_ids, input_mask, segment_ids

- Tokenization is done with the tokenizer that ships with the pretrained BERT model.

"""

# Label categories

label_list = [0,1]

# maximum length of (token) input sequences

max_seq_length = 128

# Define the batch size

train_batch_size = 32

# Get BERT layer and tokenizer:

# BERT details here: https://tfhub.dev/tensorflow/bert_en_uncased_L-12_H-768_A-12/2

bert_layer = hub.KerasLayer("https://tfhub.dev/tensorflow/bert_en_uncased_L-12_H-768_A-12/2",

trainable=True)

vocab_file = bert_layer.resolved_object.vocab_file.asset_path.numpy()

do_lower_case = bert_layer.resolved_object.do_lower_case.numpy()

tokenizer = tokenization.FullTokenizer(vocab_file, do_lower_case)

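# FullTokenizer.tokenize applies basic tokenization (lowercasing, punctuation splitting) and then WordPiece,
# which is the same path convert_single_example uses later
tokenizer.tokenize('hey, how are you ?')

tokenizer.convert_tokens_to_ids(tokenizer.tokenize('hey, how are you ?'))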

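We need to transform our data into a format BERT understands. This involves two steps. First, we wrap each question in an InputExample, using the InputExample constructor from classifier_data_lib in the TensorFlow Model Garden. Second, we convert each InputExample into BERT input features (token IDs, input mask, segment IDs and label ID) with classifier_data_lib.convert_single_example.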
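For a single sentence, the conversion to features ends with three fixed-length integer lists. Below is a simplified sketch of that packing in plain Python, assuming the token IDs were already produced by the tokenizer. `build_single_sentence_features` is a hypothetical helper, not part of classifier_data_lib, and it ignores sentence pairs and label mapping.

```python
def build_single_sentence_features(token_ids, max_seq_length, cls_id, sep_id):
    """Pack one tokenized sentence into BERT's three fixed-length inputs (simplified)."""
    # Truncate, leaving room for the [CLS] and [SEP] special tokens.
    token_ids = token_ids[: max_seq_length - 2]
    input_ids = [cls_id] + token_ids + [sep_id]
    input_mask = [1] * len(input_ids)   # 1 = real token, 0 = padding
    segment_ids = [0] * len(input_ids)  # a single sentence is entirely segment 0

    # Zero-pad every list to exactly max_seq_length.
    padding = [0] * (max_seq_length - len(input_ids))
    return input_ids + padding, input_mask + padding, segment_ids + padding


# 101 and 102 are the [CLS] and [SEP] IDs in the uncased BERT vocabulary;
# 7, 8 and 9 are placeholder word-piece IDs.
ids, mask, segments = build_single_sentence_features([7, 8, 9], max_seq_length=8, cls_id=101, sep_id=102)
print(ids)       # [101, 7, 8, 9, 102, 0, 0, 0]
print(mask)      # [1, 1, 1, 1, 1, 0, 0, 0]
print(segments)  # [0, 0, 0, 0, 0, 0, 0, 0]
```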

def to_feature(text, label, label_list=label_list, max_seq_length=max_seq_length, tokenizer=tokenizer):
    # wrap the raw text and label (eager tensors) in an InputExample
    example = classifier_data_lib.InputExample(guid=None,
                                               text_a=text.numpy(),
                                               text_b=None,
                                               label=label.numpy())
    # tokenize, add [CLS]/[SEP], truncate/pad to max_seq_length and map the label to its ID
    feature = classifier_data_lib.convert_single_example(0, example, label_list, max_seq_length, tokenizer)
    return (feature.input_ids, feature.input_mask, feature.segment_ids, feature.label_id)

You want to use Dataset.map to apply this function to each element of the dataset. Dataset.map runs in graph mode.

- Graph tensors do not have a value.

- In graph mode you can only use TensorFlow Ops and functions.

So you can't .map this function directly: you need to wrap it in a tf.py_function. The tf.py_function will pass regular tensors (with a value and a .numpy() method to access it) to the wrapped Python function.
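The same pattern can be seen in isolation with a toy Python function. In this sketch, `count_words` and `count_words_map` are hypothetical stand-ins for `to_feature` and `to_feature_map`: the inner function calls `.numpy()`, which only works because `tf.py_function` hands it eager tensors, and the static shape is restored with `set_shape` afterwards.

```python
import tensorflow as tf


def count_words(text):
    # Inside tf.py_function, `text` is an eager tensor, so .numpy() is available.
    return len(text.numpy().decode("utf-8").split())


def count_words_map(text):
    # Dataset.map traces this function as a graph; py_function calls back into Python.
    n_words = tf.py_function(count_words, inp=[text], Tout=tf.int32)
    # py_function outputs have an unknown shape, so declare it explicitly.
    n_words.set_shape([])
    return n_words


ds = tf.data.Dataset.from_tensor_slices(["how are you", "hello"])
ds = ds.map(count_words_map)
print([int(n) for n in ds.as_numpy_iterator()])  # [3, 1]
```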

def to_feature_map(text, label):
    input_ids, input_mask, segment_ids, label_id = tf.py_function(to_feature, inp=[text, label],
                                                                  Tout=[tf.int32, tf.int32, tf.int32, tf.int32])
    # py_function outputs have unknown shapes, so set them explicitly for Keras
    input_ids.set_shape([max_seq_length])
    input_mask.set_shape([max_seq_length])
    segment_ids.set_shape([max_seq_length])
    label_id.set_shape([])

    # feature dictionary keyed by the names of the model's Input layers
    x = {
        'input_word_ids': input_ids,
        'input_mask': input_mask,
        'input_type_ids': segment_ids
    }
    return (x, label_id)

with tf.device('/cpu:0'):
    # train: convert to features, shuffle, batch and prefetch
    train_data = (train_data.map(to_feature_map,
                                 num_parallel_calls=tf.data.experimental.AUTOTUNE)
                  .shuffle(1000)
                  .batch(train_batch_size, drop_remainder=True)
                  .prefetch(tf.data.experimental.AUTOTUNE))

    # valid: same conversion and batch size, no shuffling
    valid_data = (valid_data.map(to_feature_map,
                                 num_parallel_calls=tf.data.experimental.AUTOTUNE)
                  .batch(train_batch_size, drop_remainder=True)
                  .prefetch(tf.data.experimental.AUTOTUNE))

The resulting tf.data.Datasets return (features, labels) pairs, as expected by keras.Model.fit:

train_data.element_spec

valid_data.element_spec

Figure 3: BERT Layer

def create_model():
    input_word_ids = tf.keras.layers.Input(shape=(max_seq_length,), dtype=tf.int32,
                                           name="input_word_ids")
    input_mask = tf.keras.layers.Input(shape=(max_seq_length,), dtype=tf.int32,
                                       name="input_mask")
    input_type_ids = tf.keras.layers.Input(shape=(max_seq_length,), dtype=tf.int32,
                                           name="input_type_ids")

    # pooled_output: one vector per question; sequence_output: one vector per token
    pooled_output, sequence_output = bert_layer([input_word_ids, input_mask, input_type_ids])

    # classification head: dropout, then a single sigmoid unit for P(insincere)
    drop = tf.keras.layers.Dropout(0.4)(pooled_output)
    output = tf.keras.layers.Dense(1, activation='sigmoid', name='output')(drop)

    model = tf.keras.Model(
        inputs={
            'input_word_ids': input_word_ids,
            'input_mask': input_mask,
            'input_type_ids': input_type_ids
        },
        outputs=output)
    return model

model = create_model()

model.compile(optimizer= tf.keras.optimizers.Adam(learning_rate=2e-5),

loss = tf.keras.losses.BinaryCrossentropy(),

metrics = [tf.keras.metrics.BinaryAccuracy()])

model.summary()

tf.keras.utils.plot_model(model=model, show_shapes=True, dpi =75)
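The model ends in `Dense(1, activation='sigmoid')` and is trained with `BinaryCrossentropy`. A small NumPy sketch of that arithmetic, using hypothetical logits for a batch of four questions: the sigmoid turns each logit into a probability, and the loss averages −[y·log(p) + (1−y)·log(1−p)] over the batch. This manual version omits the small epsilon clipping that Keras applies to probabilities.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def binary_cross_entropy(y_true, p):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over the batch (no clipping)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    p = np.asarray(p, dtype=np.float64)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))


# Hypothetical pre-sigmoid outputs of the final Dense layer and their true labels.
logits = np.array([2.0, -1.0, 0.0, -3.0])
labels = np.array([1, 0, 1, 0])

probs = sigmoid(logits)  # predicted P(insincere) per question
print(probs.round(3))
print(binary_cross_entropy(labels, probs))
```

A prediction of exactly 0.5 costs log(2) ≈ 0.693 regardless of the label, which is a useful reference point when reading the training loss curve.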

epochs = 4

history = model.fit(train_data,

validation_data=valid_data,

epochs = epochs,

verbose = 1)

import matplotlib.pyplot as plt

def plot_graphs(history, metric):
    plt.plot(history.history[metric])
    plt.plot(history.history['val_'+metric], '')
    plt.xlabel("Epochs")
    plt.ylabel(metric)
    plt.legend([metric, 'val_'+metric])
    plt.show()

plot_graphs(history, 'binary_accuracy')

plot_graphs(history, 'loss')

sample_examples = ['Are you ashamed of being an Indian?',' you are a racist', ' Its really helpfull, thank you', ' Thanks for you help',]

test_data = tf.data.Dataset.from_tensor_slices((sample_examples, [0]*len(sample_examples)))

test_data = (test_data.map(to_feature_map).batch(1))

preds = model.predict(test_data)

threshold = 0.7

['Insincere' if pred>= threshold else 'Sincere' for pred in preds]
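The threshold decides how confident the model must be before a question is labelled "Insincere". The sketch below applies the same rule in a vectorized way to hypothetical probabilities (not real model outputs) and compares the default 0.5 cut-off with the 0.7 used above. A higher threshold flags fewer questions, trading recall on the insincere class for precision; in practice it would be tuned on validation data.

```python
import numpy as np

# Hypothetical predicted probabilities, shaped (n_examples, 1) like the output of model.predict here.
example_probs = np.array([[0.92], [0.65], [0.10], [0.71]])


def to_labels(probs, threshold):
    # Flatten (n, 1) -> (n,) and compare every probability with the threshold at once.
    return np.where(probs.ravel() >= threshold, "Insincere", "Sincere").tolist()


print(to_labels(example_probs, 0.5))  # ['Insincere', 'Insincere', 'Sincere', 'Insincere']
print(to_labels(example_probs, 0.7))  # ['Insincere', 'Sincere', 'Sincere', 'Insincere']
```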